Redefine your infrastructure through Virtualization

Companies frequently look for ways to use technology to reduce costs and enhance productivity. As technology advances, it is crucial to stay up to date on new strategies that help your firm remain competitive. If you work in Information Technology (IT), you should know what virtualization is and how it might be used in your company.

In this post, we describe virtualization, explore its benefits, identify the different types of virtualization, present an example, and offer advice on how to pick a virtualization solution.

Virtualization defined

Virtualization is the technique of creating a virtual, software-based version of computer hardware. It establishes an abstraction layer between a computer's hardware and the operating system and applications that run on it. By using virtualization to emulate functions traditionally tied to hardware, you can run many operating systems on a single computer at the same time.

This procedure is comparable to partitioning, which IT experts use to divide a physical server into multiple logical servers that can run operating systems and applications independently. IT experts also use virtualization to create software-based versions of servers, applications, networks, and storage.

In more practical terms, assume you have three physical servers, each with a specific purpose: one is a mail server, another is a web server, and the third hosts internal applications. Each server is being used at only about 30% of its capacity. But because the internal applications are still vital to your organization's operations, you must keep them, along with the third server that hosts them, right?

Historically, yes. It was often easier and more reliable to run individual tasks on individual servers: 1 server, 1 operating system, and 1 task. Giving one server multiple brains was not straightforward. With virtualization, however, you can split the mail server into two separate virtual servers that handle independent tasks, so the internal applications can be moved onto it. It's the same hardware; you're simply making better use of it.

Keeping security and efficiency in balance, you could then split the first server again to take on another job, raising its utilization from 30% to 60% and finally to 90%. The now-empty servers can then be reused for other tasks or retired entirely to save on cooling and maintenance.
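The consolidation arithmetic above can be sketched in a few lines of Python. This is only an illustration: the `consolidate` function, the workload names, and the 90% ceiling are assumptions made for the example, and real capacity planning also has to account for memory, storage, I/O, and peak load.

```python
def consolidate(loads, ceiling=90):
    """Pack workloads onto as few hosts as possible (first-fit decreasing).

    loads:   mapping of workload name -> share of one server's capacity, in percent
    ceiling: hypothetical maximum utilization allowed per host, in percent
    """
    hosts = []  # each host is a dict of workload name -> percent used
    # Place the largest workloads first; sorted() is stable for equal loads.
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        for host in hosts:
            if sum(host.values()) + load <= ceiling:
                host[name] = load  # fits on an existing host
                break
        else:
            hosts.append({name: load})  # no host had room, so start a new one
    return hosts


# The three servers from the example, each about 30% utilized.
workloads = {"mail": 30, "web": 30, "internal apps": 30}
hosts = consolidate(workloads)

for number, host in enumerate(hosts, start=1):
    print(f"host {number}: {sum(host.values())}% used by {list(host)}")
```

With a 90% ceiling all three workloads fit on one host, which matches the 30% to 60% to 90% progression described above. Lowering the ceiling to 60% leaves headroom and keeps two hosts in service.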

Virtualization - A brief history

While virtualization technology has been around since the 1960s, it was not widely used until the early 2000s.

Virtualization technologies, such as hypervisors, were created decades ago to give multiple users concurrent access to computers that performed batch processing. Batch processing was a popular computing style in business that ran routine tasks (like payroll) thousands of times very quickly.

However, over the next couple of decades, other solutions to the many users/single machine problem gained prominence while virtualization did not. One of them was time-sharing, which isolated users within operating systems and inadvertently led to other operating systems such as UNIX, which eventually gave rise to Linux. Throughout this time, virtualization remained a largely unadopted, niche technology.

Now fast forward to the 1990s. Most businesses used physical servers and single-vendor IT stacks, which did not allow legacy software to run on hardware from a different vendor. As companies upgraded their IT infrastructures with less-expensive commodity servers, operating systems, and applications from a wide range of vendors, they were bound to underutilize physical hardware, because each server could only do one vendor-specific job.

This is where virtualization took off. It was the natural answer to two problems: businesses could partition their servers, and they could run legacy applications on several operating system types and versions. Servers began to be used more efficiently (or not at all), lowering the costs of purchase, setup, cooling, and maintenance.

The widespread adoption of virtualization helped reduce vendor lock-in and made it the foundation of cloud computing. It is so common in businesses today that specialized virtualization management software is often needed to keep track of everything.

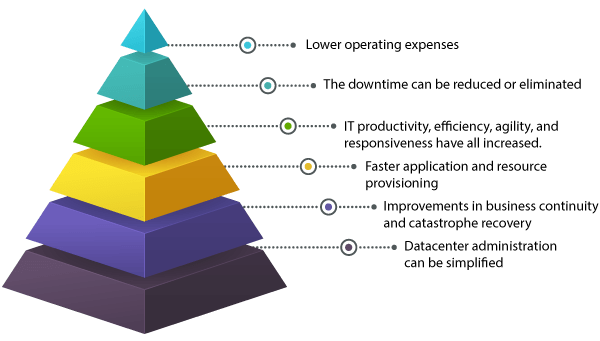

The Advantages of Virtualization

How Virtualization Works

Virtualization separates an application, a guest operating system, or data storage from the underlying software or hardware. A hypervisor is a thin software layer that reproduces the operations and behavior of the underlying hardware for the abstracted hardware or software, which makes it possible to run multiple virtual machines on a single physical system. While the performance of these virtual machines may not be on par with that of operating systems running directly on hardware, it is more than enough for most systems and applications, because most of them do not make full use of the underlying hardware. Once this dependence on specific hardware is removed, virtual machines give their users better isolation, flexibility, and control.

Types of Virtualization

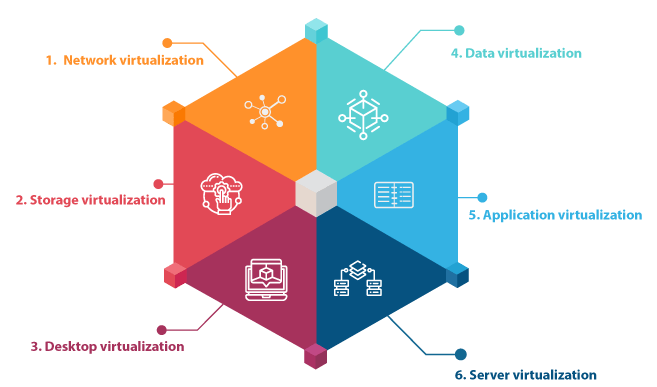

Initially, virtualization was used mainly for servers. However, its popularity has since driven its expansion to cover networks, applications, data, desktops, and storage. We'll go through each kind briefly.

1. Network virtualization

Today's communication networks are massive, ever-changing, and increasingly complicated. They also rely heavily on hardware, which makes them rigid and costly to operate. Making changes to them, as well as launching new products and services, takes time. This is where virtualization comes into play. Network virtualization divides the available bandwidth into channels, each of which is independent and can be assigned or reassigned separately. By dividing the network into manageable segments, virtualization hides the underlying complexity of the network, so that changes can be made and resources deployed on specific channels instead of across the entire network.

2. Storage virtualization

Storage virtualization is the technique of pooling physical storage capacity from multiple storage devices into what appears to be a single device. A central console manages this single logical storage device. Storage virtualization significantly reduces administrative expenses and allows businesses to make better use of their storage.
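As a rough illustration of pooling, the sketch below presents three devices as one logical range of blocks and translates a logical block number back to the device that holds it. The `StoragePool` class, the device names, and the simple end-to-end concatenation are assumptions chosen for clarity; real storage virtualization products add striping, redundancy, and caching on top of this idea.

```python
class StoragePool:
    """Toy model: several physical devices exposed as one logical device."""

    def __init__(self, devices):
        # devices: mapping of (hypothetical) device name -> capacity in blocks
        self.devices = list(devices.items())
        self.capacity = sum(size for _, size in self.devices)

    def locate(self, logical_block):
        """Translate a logical block number into (device name, physical block)."""
        if not 0 <= logical_block < self.capacity:
            raise IndexError("block is outside the pool")
        offset = logical_block
        # Devices are concatenated in order, so walk them until the offset fits.
        for name, size in self.devices:
            if offset < size:
                return name, offset
            offset -= size


pool = StoragePool({"disk-a": 100, "disk-b": 250, "disk-c": 50})
print(pool.capacity)      # total blocks the consumer sees as one device
print(pool.locate(120))   # which device actually stores logical block 120
```

The consumer only ever works with block numbers from 0 to `pool.capacity - 1`; which physical device serves a given block is a detail the pool hides.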

3. Desktop virtualization

In this approach a workstation, rather than a server, is virtualized. This lets the user connect to the desktop remotely using a thin client. (A thin client is a low-cost endpoint computing device that relies heavily on a network connection to a central server for processing.) Because the workstation now runs on a server in the data center, accessing it is easier and more secure. It also reduces the need for operating system licenses and infrastructure.

4. Data virtualization

Data virtualization is a data management approach in which programs can retrieve and modify data without needing technical details about that data. In essence, data can be retrieved and managed without knowing where it is physically stored, how it is formatted, or how it was obtained. It broadens access by delivering a uniform, integrated view of corporate data in (near) real time, as required by applications, processes, analytics, and business users.
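To make the idea of a uniform view more concrete, here is a small sketch in which one source holds CSV text and another holds JSON text, yet the caller queries both through the same interface. Everything in it (the `VirtualView` class, the reader functions, the sample records) is hypothetical, and it covers only reading; real data virtualization platforms also handle updates, security, and query optimization.

```python
import csv
import io
import json

# Two hypothetical sources with different formats.
CSV_SOURCE = "id,name\n1,Ada\n2,Grace\n"
JSON_SOURCE = '[{"id": 3, "name": "Linus"}]'


def read_csv_source():
    """Yield records from the CSV source in the common shape."""
    for row in csv.DictReader(io.StringIO(CSV_SOURCE)):
        yield {"id": int(row["id"]), "name": row["name"]}


def read_json_source():
    """Yield records from the JSON source in the common shape."""
    for row in json.loads(JSON_SOURCE):
        yield {"id": row["id"], "name": row["name"]}


class VirtualView:
    """One logical table over several sources; callers never see the formats."""

    def __init__(self, *readers):
        self.readers = readers

    def rows(self):
        for reader in self.readers:
            yield from reader()

    def find(self, **criteria):
        """Return the rows whose fields equal every given criterion."""
        return [
            row for row in self.rows()
            if all(row.get(key) == value for key, value in criteria.items())
        ]


customers = VirtualView(read_csv_source, read_json_source)
print([row["name"] for row in customers.rows()])
print(customers.find(id=3))
```

The calling code asks for customers by field and never needs to know that one record came from CSV and another from JSON.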

5. Application virtualization

Application virtualization abstracts the application layer from the operating system. This enables the program to run in an encapsulated form, independent of the underlying operating system. For example, application virtualization can allow a Windows program to run on Linux.

6. Server virtualization

Server virtualization is the practice of concealing server resources from server users. The concealed details include the number and identity of the individual physical servers, processors, and operating systems involved. In this way, the user is spared from having to understand and manage the complexities of server resources. The method also improves resource sharing and utilization while leaving room for future growth.

Additional Reading

Virtualization of network functions

Network function virtualization (NFV) separates the main functions of a network (such as directory services, file sharing, and IP configuration) so that they can be distributed across environments. Once software functions are independent of the physical machines they originally ran on, specific functions can be packaged together into a new network and assigned to an environment. Virtualizing networks reduces the number of physical components, such as switches, routers, servers, cables, and hubs, needed to build multiple independent networks, and it is especially popular in the telecommunications sector.

Understanding Virtual Machines

A virtual computer system is known as a virtual machine, abbreviated as "VM." A VM is a tightly isolated software container with an operating system and applications inside. Each virtual machine is entirely self-contained and independent. By placing multiple virtual machines on a single computer, you can run various operating systems and applications at the same time on one physical server, which is known as the host. A thin layer of software called a hypervisor decouples the virtual machines from the host and allocates computing resources to each virtual machine as needed.

How do virtual servers work?

What makes server virtualization possible?

Hypervisor software separates physical resources from the virtual environments that need those resources. Hypervisors can run on top of an operating system (as on a laptop) or be installed directly on hardware (as on a server), which is how most businesses virtualize. Hypervisors take your physical resources and divide them up so that virtual environments can use them.

Resources are partitioned as needed from the physical environment to the various virtual environments. Users interact with the virtual environment (typically called a guest machine or virtual machine) and run their computations within it. The virtual machine functions as a single data file. And, like any digital file, it can be moved from one computer to another, opened on either, and expected to work the same way.

When the virtual environment is running and a user or program issues an instruction that requires additional resources from the physical environment, the hypervisor forwards the request to the physical system and returns the response, all at near-native speed.

Hypervisors come in two types:

- A Type 1 hypervisor, the most prevalent kind, sits directly on the hardware and virtualizes the physical platform so that several virtual machines can use it.

- A Type 2 hypervisor, on the other hand, runs on a host operating system and uses it to create isolated guest virtual machines.
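The way a hypervisor carves up physical resources among guests can be sketched with a toy model. The `Hypervisor` class below, its method names, and the CPU and memory figures are all assumptions made for illustration; it does not reflect the API of any real hypervisor, and real products also schedule CPU time, overcommit memory, and virtualize devices.

```python
class Hypervisor:
    """Toy model: hands out a host's physical resources to guest VMs."""

    def __init__(self, cpus, memory_gb):
        self.free = {"cpus": cpus, "memory_gb": memory_gb}
        self.guests = {}  # guest name -> resources reserved for it

    def create_vm(self, name, cpus, memory_gb):
        """Reserve resources for a new guest, or refuse if the host is full."""
        if name in self.guests:
            raise ValueError(f"guest {name!r} already exists")
        request = {"cpus": cpus, "memory_gb": memory_gb}
        if any(request[key] > self.free[key] for key in request):
            raise RuntimeError("not enough free physical resources")
        for key, amount in request.items():
            self.free[key] -= amount
        self.guests[name] = request

    def destroy_vm(self, name):
        """Remove a guest and return its resources to the free pool."""
        for key, amount in self.guests.pop(name).items():
            self.free[key] += amount


host = Hypervisor(cpus=16, memory_gb=64)
host.create_vm("mail", cpus=4, memory_gb=16)
host.create_vm("web", cpus=8, memory_gb=32)
print(host.free)  # what is left for further guests
```

Each guest sees only the slice it was given, while the hypervisor tracks what remains on the host and refuses requests that the physical machine cannot satisfy.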

Each virtual server replicates the functions of a dedicated server while sharing a single machine with other virtual servers. Each one can run its own operating system and software, can be rebooted independently, and is administered through its own root access. In a virtual server environment, website administrators and ISPs can have independent domain names, IP addresses, analytics, logs, file directories, email administration, and more. Security mechanisms and passwords operate independently, just as they would in a dedicated server environment.

Why migrate your virtual environment to Proinf?

Because a choice like this is about more than infrastructure. It is about what your infrastructure can (and cannot) do to support the technologies that rely on it. Being contractually bound to an increasingly expensive vendor limits your capacity to invest in modern technologies such as clouds, containers, and automation solutions.

Our open-source virtualization solutions, by contrast, are not tied to ever-expanding enterprise-license agreements, and we provide complete access to the environment. So nothing is stopping you from adopting Agile practices, deploying a hybrid cloud, or experimenting with automation tools.

Key Points to summarize

- Virtualization allows a single physical computer to function as if it were numerous computers.

- Among the many benefits, the technique cuts both immediate and long-term costs.

- It helps avoid the underutilization of resources.

- Virtual private and dedicated servers, desktop virtualization, database and storage virtualization, and cloud computing are all direct or indirect products of the technology.